Financial institutions are working to stop a growing wave of synthetic identity fraud made possible by advanced AI technologies. A new survey of 500 fraud experts from banks, credit agencies, and other finance companies shows deep concern about this fast-spreading threat, which costs lenders tens of billions of dollars yearly. These scams use AI to fabricate customer identities, open fraudulent accounts and steal money.

This crime is expected to snowball as fraudsters use AI to produce more believable fake identities at scale. As automated systems approve fraudulent applications, fake identities built to get around security measures are entering financial systems at an unprecedented rate. As thieves use AI to refine their methods, institutions are racing to close security gaps before the rise of synthetic identity fraud leaves them even more exposed.

Experts say synthetic identity fraud, in which scammers manufacture fictional customers by combining real personal data like Social Security numbers with made-up information, is being turbocharged by the spread of AI tools capable of generating content like text, images and data.

These generative AI programs can help criminals quickly scrape the internet for information, impersonate real people and create trails of digital activity to make their fake customers seem authentic and bypass security checks, according to Ari Jacoby, the founder of Deduce.

"More bad accounts can be created and more success can be had by those bad actors creating these fraudulent accounts," Jacoby said. "And ultimately they're in it to steal money."

The survey results echo urgent warnings in recent months from credit card companies, government agencies and industry analysts about the growing menace of AI-enabled online financial scams, especially synthetic identity fraud.

Thomson Reuters in April called synthetic identity fraud "one of the fastest-growing financial crimes" and advised financial firms to increase identity verification requirements to combat it.

Mastercard in July said it is using its own AI tools to trace the movement of money and curb identity fraud. But Ajay Bhalla, the company’s head of cyber intelligence, said criminals “haven’t needed to break any security measures” because banks have found the scams “incredibly challenging to detect.”

State agencies like the California Department of Financial Protection and Innovation have also cautioned that generative AI can be exploited to impersonate people and pull off synthetic fraud.

According to credit reporting agency Equifax, traditional fraud prevention systems often fail to detect synthetic identity fraud. Scammers frequently target young people and seniors, the groups most vulnerable to such online financial scams.

To counter these schemes, financial institutions need advanced AI capabilities to flag suspicious patterns in massive datasets, said Jacoby. His company uses AI to hunt for irregularities that reveal fraudulent activity.

“The very technology that empowers us may also imperil us,” said consultant Nick Shevelyov, a former Silicon Valley chief security officer. “The technologies used to defend against this are getting better, but also just the proliferation of false identities are also increasing.”

While AI-enabled security tools hold promise, everyday consumers also need to be vigilant about protecting their personal data to avoid falling prey to fast-moving, AI-boosted online financial scams, experts say.

Criminals Leverage Generative AI to Manufacture Fake Identities

One major factor fueling the rise in synthetic identity fraud is the emergence of generative AI tools that can rapidly produce convincing fake online personas, security experts say.

Generative AI refers to artificial intelligence capabilities that can generate new content like text, images, video or audio from scratch with just a simple prompt.

Powerful generative AI models such as DALL-E 2, GPT-3, and Stable Diffusion allow users to effortlessly create synthetic media, websites, online profiles, and more.

Cybersecurity analyst Samantha Benoit says that fraudsters are now harnessing these off-the-shelf generative AI tools to manufacture fake digital people on an industrial scale.

"With generative AI, criminals can automate the process of creating fake identities with realistic personal details culled from the internet," Benoit said. "This allows them to commit synthetic identity fraud at a massive scale."

From generating profile photos to fabricating browsing histories to mimicking writing patterns, generative AI makes every step of identity fabrication fast and easy.

"It's never been simpler for a scammer to produce everything needed for a believable synthetic identity," Benoit said. "They can churn out thousands of fraudulent online personas in hours."

And these AI-generated identities are intricate enough to evade many fraud detection systems that rely on spotting red flags, she added.

Financial Industry Struggles to Combat Sophisticated Scams

Synthetic identity fraud enabled by generative AI poses a major challenge for banks, lenders and other financial institutions.

According to credit bureau TransUnion, synthetic identity fraud losses in the U.S. totaled $6 billion in 2020 alone.

And a report by the firm Arkose Labs found that up to 20% of online accounts created each day are fake identities used for financial crimes.

The problem, experts say, is that synthetic identities either partially or fully created by AI can appear very convincing and human-like. They are specifically designed to bypass fraud prevention measures.

"Most systems are still tuned to catch basic identity spoofing and impersonation," said financial crime analyst Victor Hong. "But generative AI produces identities that are very difficult to differentiate from real people."

As a result, AI-manufactured fake personas slip past identity checks, credit checks, multi-factor authentication and other safeguards.

"Legacy fraud tools are no match for the power of generative AI in the wrong hands," Hong said. "Financial institutions need new protections powered by AI themselves just to keep up."

Heightened Vigilance Needed to Combat AI-Enabled Fraud

To counter the growing threat of generative AI-powered synthetic identity theft, experts recommend heightened vigilance by financial institutions, law enforcement and consumers.

Banks and lenders need to invest in advanced AI capabilities specifically designed to detect AI-generated fraud and fake accounts, analysts say. Identifying patterns like abnormal account volumes or unusual data flows can help flag mass-produced synthetic identities.
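As a rough illustration of what flagging "abnormal account volumes" might look like, the sketch below checks for bursts of account applications coming from the same device within a short time window. It is a minimal example under stated assumptions, not a description of any vendor's system: the function name `flag_signup_bursts`, the `device_id` and `submitted_at` fields, the one-hour window and the threshold of three applications are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_signup_bursts(applications, window=timedelta(hours=1), threshold=3):
    """Return device IDs with at least `threshold` applications inside any `window`.

    `applications` is a list of dicts with hypothetical keys
    "device_id" (str) and "submitted_at" (datetime).
    """
    # Group application timestamps by the device that submitted them.
    by_device = defaultdict(list)
    for app in applications:
        by_device[app["device_id"]].append(app["submitted_at"])

    flagged = set()
    for device_id, times in by_device.items():
        times.sort()
        start = 0
        for end in range(len(times)):
            # Advance the left edge until the span fits inside `window`.
            while times[end] - times[start] > window:
                start += 1
            # Count of applications currently inside the sliding window.
            if end - start + 1 >= threshold:
                flagged.add(device_id)
                break
    return flagged


# Illustrative data only: dev-A submits three applications in 25 minutes,
# dev-B submits two applications five hours apart.
base = datetime(2024, 1, 1, 9, 0)
applications = [
    {"device_id": "dev-A", "submitted_at": base},
    {"device_id": "dev-A", "submitted_at": base + timedelta(minutes=10)},
    {"device_id": "dev-A", "submitted_at": base + timedelta(minutes=25)},
    {"device_id": "dev-B", "submitted_at": base},
    {"device_id": "dev-B", "submitted_at": base + timedelta(hours=5)},
]

print(flag_signup_bursts(applications))  # prints {'dev-A'}
```

A volume check like this is only one signal. A real system would likely combine it with many others and tune the window and threshold against actual traffic, since legitimate shared devices (a library computer, for instance) can also produce bursts.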

Law enforcement agencies also need to prioritize combating AI-enabled financial crimes, said security expert Alicia Thompson.

"The legal system needs to catch up to the reality of generative AI and its potential for mass-scale criminal exploitation," Thompson said.

But individuals also need to be cautious about protecting their personal data from automated data scraping, which fuels synthetic identity creation, experts emphasize.

"With vigilance and the right safeguards, we can counter this rising AI-driven fraud threat," Thompson said. "But it requires urgent action before losses escalate further."

What is seditious conspiracy, which is among the most serious crimes Trump pardoned?

What is seditious conspiracy, which is among the most serious crimes Trump pardoned?

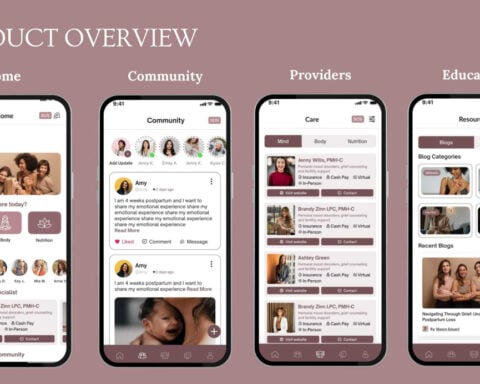

Savannah women brings hope and help with new Maternal Mental Health app

Savannah women brings hope and help with new Maternal Mental Health app

Amazon to close 7 warehouses in the Canadian province of Quebec and eliminate 1,700 jobs

Amazon to close 7 warehouses in the Canadian province of Quebec and eliminate 1,700 jobs

House prepares to pass immigrant detention bill that would be Trump's first law to sign

House prepares to pass immigrant detention bill that would be Trump's first law to sign

Lewis Hamilton says driving a Ferrari F1 car for first time was 'exciting and special'

Lewis Hamilton says driving a Ferrari F1 car for first time was 'exciting and special'

The head of a federal agency for consumers has packed up his office. But will Trump fire him?

The head of a federal agency for consumers has packed up his office. But will Trump fire him?

Meagan Good says goodbye to 'Harlem,' hello to her future with Jonathan Majors

Meagan Good says goodbye to 'Harlem,' hello to her future with Jonathan Majors

K-9's retirement party was everything a dog could want

K-9's retirement party was everything a dog could want

Why some Instagram users aren’t able to unfollow Trump and JD Vance

Why some Instagram users aren’t able to unfollow Trump and JD Vance